Indian Journal of Science and Technology

DOI: 10.17485/IJST/v16i47.2643

Year: 2023, Volume: 16, Issue: 47, Pages: 4525-4546

Review Article

Miranda Surya Prakash1*, S N Devananda2

1Research Scholar, Department of ECE, PES Institute of Technology and Management, Visvesvaraya Technological University, Shimogha, Karnataka, India

2Professor, Department of ECE, PES Institute of Technology and Management, Visvesvaraya Technological University, Shimogha, Karnataka, India

*Corresponding Author

Email: [email protected]

Received Date:18 October 2023, Accepted Date:28 October 2023, Published Date:31 December 2023

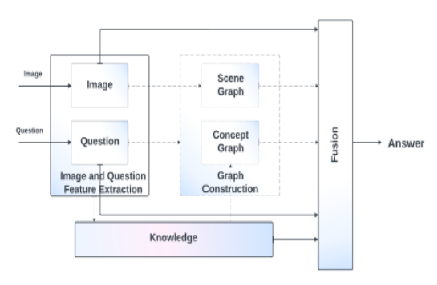

Objectives: Multimodal deep learning, incorporating images, text, videos, speech, and acoustic signals, has grown significantly. This article aims to explore the untapped possibilities of multimodal deep learning in Visual Question Answering (VQA) and address a research gap in the development of effective techniques for comprehensive image feature extraction. Methods: This article provides a comprehensive overview of VQA and the associated challenges. It emphasizes the need for an extensive representation of images in VQA and pinpoints the specific research gap pertaining to image feature extraction and highlights the fundamental concepts of VQA, the challenges faced, different approaches and applications used for VQA tasks. A substantial portion of this review is devoted to investigating recent advancements in image feature extraction techniques. Findings: Most existing VQA research predominantly emphasizes the accurate matching of answers to given questions, often overlooking the necessity for a comprehensive representation of images. These models primarily rely on question content analysis while underemphasizing image understanding or sometimes neglect image examination entirely. There is also a tendency in multimodal systems to neglect or overemphasize one modality, notably the visual one, which challenges genuine multimodal integration. This article reveals that there is limited benchmarking for image feature extraction techniques. Evaluating the quality of extracted image features is crucial for VQA tasks. Novelty: While many VQA studies have primarily concentrated on the accuracy of answers to questions, this review emphasizes the importance of comprehensive image representation. The paper explores recent advances in Capsules Networks (CapsNets) and Vision Transformers (ViTs) as alternatives to traditional Convolutional Neural Networks (CNNs), for development of more effective image feature extraction techniques which can help to address the limitations of existing VQA models that focus primarily on question content analysis.

Keywords: Feature Extraction, Visual Question Answering, Multimodal Deep Learning, Capsule Networks, Vision Transformer, Datasets

© 2023 Prakash & Devananda. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited. Published By Indian Society for Education and Environment (iSee)

Subscribe now for latest articles and news.