Indian Journal of Science and Technology

Year: 2023, Volume: 16, Issue: 7, Pages: 460-467

Original Article

Rayeesa Mehmood¹, Rumaan Bashir¹*, Kaiser J Giri¹

¹Department of Computer Science, Islamic University of Science & Technology, Kashmir, Jammu and Kashmir, India

*Corresponding Author


Received Date: 29 November 2022, Accepted Date: 17 January 2023, Published Date: 17 February 2023

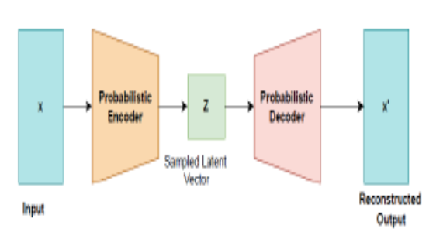

Objectives: To provide insight into deep generative models (DGMs) and to review the most prominent and efficient of them, namely Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs).

Methods: We provide a comprehensive overview of VAEs and GANs, along with their advantages and disadvantages. This paper also surveys the recently introduced attention-based GANs and the even more recent Transformer-based GANs.

Findings: GANs have been intensively researched because of their significant advantages over VAEs, and they are powerful generative models that have been widely employed in a variety of fields. Despite these advantages and their immense popularity and success, GANs remain difficult to train, and their training is prone to several failures. These include mode collapse, where the generator produces the same set of outputs for different inputs, resulting in a loss of diversity; non-convergence, caused by oscillating and diverging behavior of the generator and discriminator during training; and vanishing or exploding gradients, where learning either stops or proceeds very slowly. Recently, several attention-based and Transformer-based GANs have also been proposed for high-fidelity image generation.

Novelty: Unlike previous survey articles, which often cover all DGMs and dive into their complicated aspects, this work focuses on the most prominent DGMs, VAEs and GANs, and provides a theoretical understanding of them. Furthermore, because GANs are currently the most extensively studied DGMs in the academic community, their literature warrants further exploration. Finally, although numerous articles on GANs are available, none has analyzed the most recent attention-based and Transformer-based GANs; this study reviews that literature, which no previous survey paper has covered.
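Two of the GAN training failures named in the Findings, vanishing gradients and oscillating, non-convergent training, can be reproduced on toy problems. The sketch below is an illustration under our own assumptions, not an experiment from this paper, and all function names are hypothetical. First, it compares the gradient (with respect to the discriminator's logit on a fake sample) of the original minimax generator loss log(1 - D(G(z))) with the commonly used non-saturating loss -log D(G(z)). Second, it runs simultaneous gradient descent-ascent on the bilinear game min_x max_y x·y, a standard stand-in for the generator-discriminator game.

```python
import math


def sigmoid(a: float) -> float:
    """Logistic function mapping a discriminator logit to a probability D."""
    return 1.0 / (1.0 + math.exp(-a))


def saturating_grad(logit: float) -> float:
    """Derivative w.r.t. the logit of the minimax generator loss log(1 - D(G(z)))."""
    # log(1 - sigmoid(a)) = -softplus(a), whose derivative is -sigmoid(a)
    return -sigmoid(logit)


def non_saturating_grad(logit: float) -> float:
    """Derivative w.r.t. the logit of the non-saturating generator loss -log D(G(z))."""
    # -log(sigmoid(a)) = softplus(-a), whose derivative is -(1 - sigmoid(a))
    return -(1.0 - sigmoid(logit))


def simultaneous_gda(x: float, y: float, lr: float, steps: int) -> list:
    """Simultaneous gradient descent on x and ascent on y for min_x max_y x*y.

    The unique equilibrium is (0, 0), but every step scales the distance
    from it by sqrt(1 + lr**2), so the iterates spiral outward.
    Returns the distance from the equilibrium after each step.
    """
    norms = [math.hypot(x, y)]
    for _ in range(steps):
        # Both players update from the same old point, as in simultaneous GAN updates
        x, y = x - lr * y, y + lr * x
        norms.append(math.hypot(x, y))
    return norms


if __name__ == "__main__":
    # A confident discriminator rejects the fake: logit -10 gives D(G(z)) near 4.5e-5
    print(saturating_grad(-10.0))      # nearly zero: the gradient has vanished
    print(non_saturating_grad(-10.0))  # close to -1: a useful learning signal remains
    norms = simultaneous_gda(1.0, 1.0, lr=0.1, steps=100)
    print(norms[0], norms[-1])         # distance from (0, 0) grows instead of shrinking
```

The bilinear game is chosen because its divergence can be checked in closed form: (x - ηy)² + (y + ηx)² = (1 + η²)(x² + y²), so no step size η > 0 makes simultaneous updates converge.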

Keywords: Variational Autoencoder; Generative Adversarial Networks; Autoencoder; Transformer; Self-Attention

© 2023 Mehmood et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited. Published By Indian Society for Education and Environment (iSee)
