Indian Journal of Science and Technology

DOI: 10.17485/IJST/v17i21.1035

Year: 2024, Volume: 17, Issue: 21, Pages: 2218-2231

Original Article

Lavanya Sanapala1*, Lakshmeeswari Gondi2

1Research Scholar, Department of CSE, GITAM School of Technology, GITAM Deemed to be University, India

2Associate Professor, Department of CSE, GITAM School of Technology, GITAM Deemed to be University, India

*Corresponding Author

Email: [email protected]

Received Date: 02 April 2024, Accepted Date: 12 May 2024, Published Date: 29 May 2024

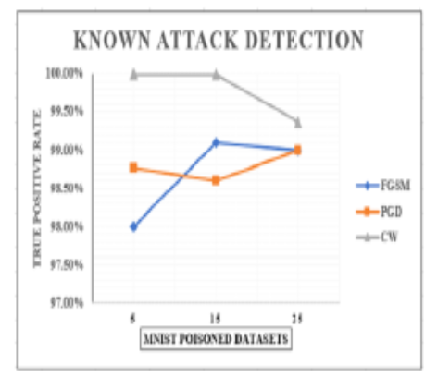

Objectives: This research paper aims to develop a novel method for identifying gradient-based data poisoning attacks on industrial applications, such as autonomous vehicles and intelligent healthcare systems, that rely on machine learning (ML) and deep learning techniques. These algorithms perform well only if they are trained on good-quality datasets. However, ML models are prone to data poisoning attacks, which target the training dataset and manipulate its input samples so that the learning algorithm is confused and produces wrong predictions. Current detection techniques are effective against known attacks but do not generalize to unknown attacks. To address this issue, this paper aims to integrate security elements within the machine learning framework, ensuring effective identification and mitigation of both known and unknown threats and achieving generalized detection.

Methods: ML-Filter, a unique attack detection approach, integrates the ML-Filter Detection Algorithm and the Statistical Perturbation Bounds Identification Algorithm to determine whether a given dataset is poisoned. The DBSCAN algorithm is used to divide the dataset into several smaller subsets, on which the algorithmic analysis for detection is performed. The performance of the proposed method is evaluated in terms of true positive rate and significance test accuracy.

Findings: The differences in probability distribution between original and poisoned datasets vary with the perturbation size rather than with the dataset or the ML model used in the application. This finding led to determining the perturbation bounds using statistical pairwise distance metrics and significance tests computed on their results. ML-Filter achieves a detection rate of 99.63% for known attacks and a generalized detection accuracy of 98% for unknown attacks.
Novelty: A secured ML architecture and a unique statistical detection approach, ML-Filter, effectively detect data poisoning attacks, demonstrating significant advancements in detecting both known and unknown threats in industrial applications that use machine learning and deep learning algorithms.
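The Methods paragraph states that DBSCAN is used to divide the dataset into smaller subsets before detection. As a minimal sketch of that partitioning step only, the block below implements classic DBSCAN in standard-library Python on small numeric points. The function names `region_query`, `dbscan` and `partition`, the Euclidean metric, and the `eps`/`min_pts` values are illustrative assumptions; the paper's feature space and parameter choices are not given in this abstract.

```python
import math
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, ...]
NOISE = -1  # label for points that belong to no dense region


def region_query(points: Sequence[Point], idx: int, eps: float) -> List[int]:
    """Indices of all points within Euclidean distance eps of points[idx] (itself included)."""
    return [j for j, q in enumerate(points) if math.dist(points[idx], q) <= eps]


def dbscan(points: Sequence[Point], eps: float, min_pts: int) -> List[int]:
    """Assign each point a cluster id 0, 1, ... or NOISE, following classic DBSCAN."""
    labels: List[Optional[int]] = [None] * len(points)
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbours = region_query(points, i, eps)
        if len(neighbours) < min_pts:
            labels[i] = NOISE  # may later become a border point of some cluster
            continue
        labels[i] = cluster  # i is a core point: start a new cluster
        seeds = list(neighbours)
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: reachable, but not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = region_query(points, j, eps)
            if len(j_neighbours) >= min_pts:
                seeds.extend(j_neighbours)  # j is also a core point: grow the cluster
        cluster += 1
    return labels  # every entry is an int by this point


def partition(points: Sequence[Point], labels: Sequence[int]) -> Dict[int, List[Point]]:
    """Group points into subsets keyed by their DBSCAN label."""
    subsets: Dict[int, List[Point]] = defaultdict(list)
    for p, lab in zip(points, labels):
        subsets[lab].append(p)
    return dict(subsets)


data = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
        (5.0, 5.0), (5.1, 5.0), (5.0, 5.1),
        (10.0, 0.0)]
labels = dbscan(data, eps=0.5, min_pts=2)
print(labels)  # [0, 0, 0, 1, 1, 1, -1]
print({k: len(v) for k, v in partition(data, labels).items()})  # {0: 3, 1: 3, -1: 1}
```

Points labelled `-1` are DBSCAN noise. Whether such points form their own subset or are handled separately in ML-Filter is not stated in the abstract, so the sketch simply keeps them under key `-1`.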

Keywords: Privacy and security, Adversarial machine learning, Secured ML Architecture, ML-Filter, Statistical Perturbation Bounds Identification Algorithm
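The Findings paragraph describes comparing original and poisoned data with statistical pairwise distance metrics and the significance tests computed on them. The abstract does not name the specific metric or test, so the sketch below assumes one common pairing: the two-sample Kolmogorov-Smirnov (KS) statistic as the distance between the empirical distributions of a clean and a suspect sample of one feature, and a permutation test for its significance. The names `ks_statistic`, `permutation_p_value` and `looks_perturbed`, the threshold `alpha=0.05`, and `n_perm=999` are hypothetical choices for illustration, not the paper's Statistical Perturbation Bounds Identification Algorithm.

```python
import random
from bisect import bisect_right
from typing import List, Sequence


def ks_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    gap = 0.0
    # The supremum of |F_a - F_b| is attained at one of the observed values.
    for x in a_sorted + b_sorted:
        cdf_a = bisect_right(a_sorted, x) / len(a_sorted)
        cdf_b = bisect_right(b_sorted, x) / len(b_sorted)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def permutation_p_value(a: Sequence[float], b: Sequence[float],
                        n_perm: int = 999, seed: int = 0) -> float:
    """Fraction of random relabellings whose KS statistic is at least the observed one."""
    rng = random.Random(seed)
    observed = ks_statistic(a, b)
    pooled: List[float] = list(a) + list(b)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if ks_statistic(pooled[:len(a)], pooled[len(a):]) >= observed:
            hits += 1
    # Add-one correction: the observed labelling counts as one permutation.
    return (hits + 1) / (n_perm + 1)


def looks_perturbed(clean: Sequence[float], suspect: Sequence[float],
                    alpha: float = 0.05) -> bool:
    """Flag the suspect sample if its distribution differs significantly from the clean one."""
    return permutation_p_value(clean, suspect) < alpha


rng = random.Random(42)
clean = [rng.gauss(0.0, 1.0) for _ in range(50)]
suspect = [rng.gauss(0.0, 1.0) + 3.0 for _ in range(50)]  # strongly shifted sample

print(ks_statistic([0.0, 1.0], [2.0, 3.0]))  # 1.0
print(looks_perturbed(clean, list(clean)))   # False
print(looks_perturbed(clean, suspect))
```

Repeating this comparison while increasing the size of an injected perturbation, and noting where the test begins to flag the sample, is one plausible way to relate distance to perturbation size; how the authors actually derive their bounds is not described in the abstract.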

© 2024 Sanapala & Gondi. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited. Published By Indian Society for Education and Environment (iSee)
